6.01 Course Notes

4) Probability

Table of Contents

- 4) Probability

4.1) Introduction

Consider trying to drive through an unfamiliar city. Even if we are able to plan a route from our starting location to our destination, navigation can fail on two counts: sometimes we don't know where we are on a map, and sometimes, due to traffic or road work or bad driving, we fail to execute a turn we had intended to take.

In such situations, we have some information about where we are: we can make observations of our local surroundings, which give us useful information; and we know what actions we have taken and the consequences those are likely to have on our location. So, the question is: how can we take information from a sequence of actions and local observations and integrate it into some sort of estimate of where we are? What form should that estimate take?

We'll consider a probabilistic approach to answering this question. We'll assume that, as you navigate, you maintain a belief state which contains your best information about what state you're in, which is represented as a probability distribution over all possible states. So, it might say that you're sure you're somewhere in Boston, and you're pretty sure it's Storrow drive, but you don't know whether you're past the Mass Ave bridge or not (of course, it will specify this all much more precisely).

We'll start by defining probability distributions on simple and complex spaces, and develop a set of methods for defining and manipulating them. Then, we'll formulate problems like the navigation problem discussed above as state estimation problems in stochastic state machines. Finally, we'll develop an algorithm for estimating the unobservable state of system, based on observations of its outputs.

4.2) State spaces

We have been using state machines to model systems and how they change over time. The state of a system is a description of the aspects of the system that allow us to predict its behavior over time. We have seen systems with finite and infinite state spaces:

A basic enumeration of states, such as ('closed', 'closing', 'open', 'opening') for an elevator controller, we will call an atomic finite state space. The states are atomic because they don't have any further detailed structure.

A state space that is described using more than one variable, such as a counter for seconds that goes from 0 to 59 and a counter for minutes that goes from 0 to 59 can be described as having two state variables: seconds and minutes. We would say that this state space is factored: a state is actually described by a value of each of the two variables.

A state space described by a single integer is a countably infinite atomic state space.

A state space described by a real number is uncountably infinite, but still atomic.

A state space described by more than one integer or real number (or a combination of continuous and discrete state variables) is a factored state space.

In this chapter, we will concentrate on factored, finite state spaces, and see how to represent and manipulate probability distributions on them.

4.3) Probability distributions on atomic state spaces

Probability theory is a calculus that allows us to assign numerical assessments of uncertainty to possible events, and then do calculations with them in a way that preserves their meaning. (A similar system that you might be more familiar with is algebra: you start with some facts that you know, and the axioms of algebra allow you to make certain manipulations of your equations that you know will preserve their truth).

The typical informal interpretation of probability statements is that they are long-term frequencies: to say "the probability that this coin will come up heads when flipped is 0.5" is to say that, in the long run (as the number of flips goes to infinity), the proportion of flips that come up heads will converge to 0.5. This is known as the frequentist interpretation of probability. But then, what does it mean to say "there is a 0.7 probability that it will rain somewhere in Boston sometime on April 29, 2017"? How can we repeat that process a lot of times, when there will only be one April 29, 2017? Another way to interpret probabilities is that they are measures of a person's (or robot's) degree of belief in the statement. This is sometimes referred to as the Bayesian interpretation. In either interpretation, the formal calculus is the same.

So, studying and applying the axioms of probability will help us make true statements about long-run frequencies and make consistent statements about our beliefs by deriving sensible consequences from initial assumptions.

We will restrict our attention to discrete sample spaces1, so we'll let U be the universe or sample space, which is a set of atomic events. An atomic event is just a state: an outcome or a way the world could be. It might be a die roll, or whether the robot is in a particular room, for example. Exactly one (no more, no less) event in the sample space is guaranteed to occur; we'll say that the atomic events are "mutually exclusive" (no two can happen at once) and "collectively exhaustive" (one of them is guaranteed to happen).

- The sample space for a coin flip might be {H, T}, standing for heads and tails.

- The sample space for a sequence of three coin flips might be {HHH, HHT, HTH, HTT, THH, THT, TTH, TTT}: all possible sequences of three heads or tails.

- The sample space for a robot navigating in a city might be the set of intersections in the city.

- The sample space for a randomly generated document might be all strings of fewer than 1000 words drawn from a particular dictionary.

An event is a subset of U; it will contain zero or more atomic events.

An event in the three-coin-flip space might be that there are at least two heads: \{ HHH, HHT, HTH, THH\} .

An event in the robot navigation problem might be that the robot is within one mile of MIT.

An event in the document domain might be that the document contains, in sequence, the words '6.01' and 'rules'.

A probability measure \Pr is a mapping from events to numbers that satisfy the following axioms:

\Pr (U) |

= 1 |

\Pr (\{ \} ) |

= 0 |

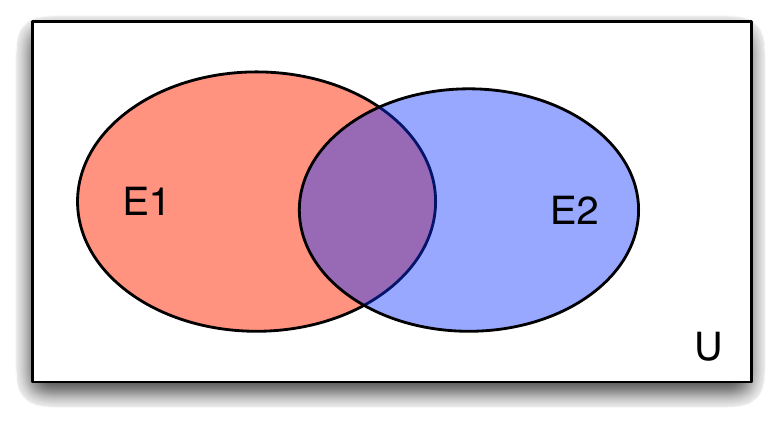

\Pr (E_1 \cup E_2) |

= \Pr (E_1) + \Pr (E_2) - \Pr (E_1 \cap E_2) |

Or, in English:

The probability that something will happen is 1.

The probability that nothing will happen is 0.

The probability that an atomic event in the set E_1 or an atomic event in the set E_2 will happen is the probability that an atomic event of E_1 will happen plus the probability that n atomic event of E_2 will happen, minus the probability that an atomic event that is in both E_1 and E_2 will happen (because those events effectively got counted twice in the sum of \Pr (E_1) and \Pr (E_2) ).

Armed with these axioms, we are prepared to do anything that can be done with discrete probability!

4.4) Conditional probability

One of the most important operations in probabilistic reasoning is incorporating evidence into our models. So, we might wonder what the probability of an event is given or conditioned on some other relevant information we have received. For example, we might want to know the probability of getting a die roll greater than 3, if we already know that the die roll will be odd.

We will write conditional probabilities as \Pr (E_1 \mid E_2) , pronounced "the probability of E_1 given E_2 ", where E_1 and E_2 are events (subset of the atomic sample space). The formal definition of conditional probability is this:

In the figure below, E_1 is the red ellipse, E_2 is the blue ellipse, and E_1 \cap E_2 is the purple intersection. When we condition on E_2 , we restrict our attention entirely to the blue ellipse, and ask what percentage of the blue ellipse is also in the red ellipse. This is the same as asking for the ratio of the purple intersection to the whole blue ellipse.

4.5) Random variables

Just as some state spaces are naturally described in their factored form (it's easier to say that there are 10 coins, each of which can be heads or tails, than to enumerate all 2^{10} possible sequences), we often want to describe probability distributions over one or more variables. We will call a probability distribution that is described over one dimension of a state space a random variable.

A discrete random variable is a discrete set of values, v_1 \ldots v_ n , and a mapping of those values to probabilities p_1 \ldots p_ n such that p_ i \in [0,1] and \sum _ i p_ i = 1 . So, for instance, the random variable associated with flipping a somewhat biased coin might be \{ \textit{heads}: 0.6, \textit{tails}: 0.4\} . We will speak of random variables having distributions: so, for example, two flips of the same biased coin are actually two different random variables, but they have the same distribution.

In a world that is appropriately described with multiple random variables, the atomic event space is the Cartesian product of the value spaces of the variables. So, for example, consider two random variables, C for cavity and A for toothache. If they can each take on the values T or F (for true and false), then the universe is pairs of values, one for each variable:

We will systematically use the following notation when working with random variables:

A : capital letters stand for random variables;

a : small letters stand for possible values of random variables; so a is an element of the domain (possible set of values) of random variable A ;

A = a : an equality statement with a random variable in it is an event: in this case, the event that random variable A has value a

Some non-atomic events in a universe described by random variables A and C might be C = c (which includes atomic events with all possible values of A ), or C = A (which includes all atomic events consisting of pairs of the same value).

4.5.1) Joint distribution

The joint distribution of a set of random variables is a function from elements of the product space to probability values that sum to 1 over the whole space. So, we would say that (C = c, A = a) (with a comma connecting the equalities), which is short for (C = c and A = a) is an atomic event in the joint distribution of C,A .

In most of our examples, we'll consider joint distributions of two random variables, but all of the ideas extend to joint distributions over any finite number of variables: if there are n random variables, then the domain of the joint distribution is all n -tuples of values, one drawn from the domain of each of the component random variables. We will write \Pr (A, B, \ldots , N) to stand for an entire joint distribution over two or more variables.

4.5.2) Conditional distribution

In Section 4.2 we gave the basic definition of conditional probabilities, in terms of events on atomic subspaces. Sometimes, it will be useful to define conditional probabilities directly. A conditional probability distribution, written \Pr (A \mid B) , where A and B are random variables (we can generalize this so that they are groups of random variables), is a function from values, b , of B , to probability distributions on A . We can think of it this way:

Here, we are using \lambda in the same way that it is used in Python: to say that this is a function that takes a value b as an argument, and returns a distribution over A .

4.6) Operations on random variables

Now that we can talk about random variables, that is, distributions over their sets of values, we can follow the PCAP principle to define a systematic way of combining them to make new distributions. In this section, we will define important basic operations.

4.6.1) Constructing a joint distribution

A convenient way to construct a joint distribution is as a product of factors. We can specify a joint distribution on C and A by the product of a distribution \Pr (A) and a conditional distribution \Pr (C \mid A) by computing the individual elements of the joint, for every pair of values a in the domain of A and c in the domain of C :

It is also true that

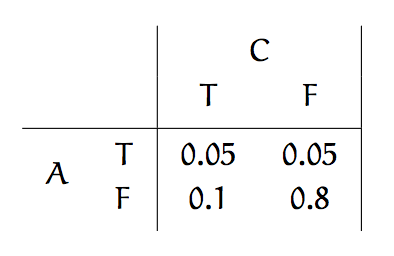

In the domain where the random variable C stands for whether a person has a cavity and A for whether they have a toothache, we might know:

The probability of a randomly chosen patient having a cavity:

\Pr (C) = \{ T: 0.15, F: 0.85\}The conditional probability of someone having a toothache given that they have a cavity:

\Pr (A \mid C) = \begin{cases} \{T : 0.333, F : 0.667\}, \text{if}~ C = T\\ \{T : 0.0588, F : 0.9412\}, \text{if}~ C = F \end{cases}

Then we could construct the following table representing the joint distribution:

The numbers in the table make up the joint probability distribution. They are assignments of probability values to atomic events, which are complete specifications of the values of all of the random variables. For example, \Pr (C=T, A=F) = 0.1 ; that is, the probability of the atomic event that random variable C has value T and random variable A has value F is 0.1 . Other events can be made up of the union of these primitive events, and specified by the assignments of values to only some of the variables. So, for instance, the event A = T is really a set of primitive events: \{ (A=T,C=F), (A=T,C=T)\} , which means that

which is just the sum of the row in the table.

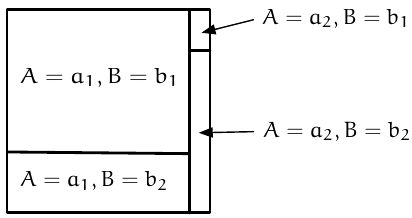

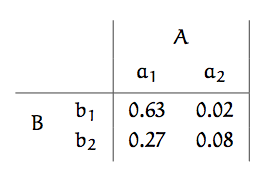

Here is another example of forming a joint distribution. Imagine that we are given the following distributions:

You can visualize the joint distribution spatially, like this:

The sample space is divided vertically according to the distribution \Pr (A) , and then, for each value of A , it is divided horizontally according to the distribution \Pr (B \mid A = a) . This joint distribution is represented numerically by the table:

We can also think of this joint distribution as just another regular distribution on a larger state space:

More generally, we can construct a joint distribution on an arbitrary number of random variables, \Pr (V_1, \ldots , V_ n) , as follows:

\Pr (V_1 = v_1, \ldots , V_ n=v_ n) = |

\Pr (V_1 =v_1\mid V_2 = v_2, \ldots , V_ n = v_ n) |

|

\cdot \Pr (V_2 =v_2\mid V_3 = v_3, \ldots , V_ n = v_ n) |

|

\ldots |

|

\cdot \Pr (V_{n-1} = v_{n-1} \mid V_ n = v_ n) |

|

\cdot \Pr (V_ n = v_ n) |

This can be done with the variables in any order.

4.6.2) Marginalization

A marginal distribution over any individual random variable can be obtained from the joint distribution by summing over all assignments to the other random variables in the joint distribution. In two dimensional tables, this means summing the rows or the columns:

\Pr (A) |

= \{ T : 0.1, F : 0.9\} |

\Pr (C) |

= \{ T : 0.15, F : 0.85\} |

Although you can compute the marginal distributions from the joint distribution, you cannot in general compute the joint distribution from the marginal distributions!! In the very special case when two random variables A and B do not influence one another, we say that they are independent, which is mathematically defined as

If we only knew the marginals of toothaches and cavities, and assumed they were independent, we would find that \Pr (C=T,A=T) = 0.015 , which is much less than the value in our joint distribution. This is because, although cavity and toothache are relatively rare events, they are highly dependent.

4.6.3) Conditioning

One more important operation on a joint distribution is conditioning. It is fundamentally the same operation as computing a conditional probability, but when the conditioning event (on the right hand side of the bar) is that a random variable has a particular value, then we get a nice simplification. So, for example, if we wanted to condition our joint toothache/cavity distribution on the event A = T , we could write \Pr (C,A \mid A = T) . But since the value for A is already made explicit in the conditioning event, we typically write this as \Pr (C \mid A = T) . It is obtained by:

Finding the row (or column) of the joint distribution corresponding to the conditioning event. In our example, it would be the row for A = T , which consists of \Pr (A = T, C = T) and \Pr (A = T, C = F) .

Dividing each of the numbers in that row (or column) by the probability of the conditioning event, which is the marginal probability of that row (or column). In our example, we would divide 0.05 by 0.1 to get

\Pr (C \mid A = T) = \{ T: 0.5, F: 0.5\}

So, in this example, although a cavity is relatively unlikely, it becomes much more likely conditioned on knowing that the person has a toothache.

We have described conditioning in the case that our distribution is only over two variables, but it applies to joint distributions over any number of variables. You just have to think of selecting the entries whose value for the specified random variable equals the specified value, and then renormalizing their probabilities so they sum to 1.

4.6.4) Bayesian reasoning

Frequently, for medical diagnosis or characterizing the quality of a sensor, it's easiest to measure conditional probabilities of the form \Pr (\textit{Symptom} \mid \textit{Disease}) , indicating what proportion of diseased patients have a particular symptom. (These numbers are often more useful, because they tend to be the same everywhere, even though the proportion of the population that has disease may differ.) But in these cases, when a patient comes in and demonstrates some actual symptom s , we really want to know \Pr (\textit{Disease} \mid \textit{Symptom} = s) . We can compute that if we also know a prior or base rate distribution, \Pr (\textit{Disease}) . The computation can be done in two steps, using operations we already know:

Form the joint distribution: \Pr (\textit{Disease}, \textit{Symptom})

Condition on the event \textit{Symptom} = s , which means selecting the row (or column) of the joint distribution corresponding to \textit{Symptom} = s and dividing through by \Pr (\textit{Symptom} = s) .

So, given an actual observation of symptoms s , we can determine a conditional distribution over \textit{Disease} , by computing for every value of d ,

The formula, when written this way, is called Bayes' Rule, after Rev. Thomas Bayes who first formulated this solution to the 'inverse probability problem,' in the 18th century.

Multiple pieces of evidence

With Bayes' rule, we have seen how to update a probability distribution P(A) based on receiving some evidence E = e to get a new distribution P(A \mid E = e) . Now, what happens if we get yet another piece of evidence F = f ?

If the two pieces of evidence are conditionally independent given A , that is, that P(F \mid A, E) = P(F \mid A) and P(E \mid A, F) = P(E \mid A)then we can incorporate the evidence sequentially. That is, we can:

Use Bayes' rule to incorporate the first piece of evidence to get P(A \mid E = e) ; then

Use Bayes' rule again, with P(A \mid E = e) as the starting distribution on A to incorporate the second piece of evidence and get P(A \mid E = e, F = f) .

And the totally cool thing is that it doesn't matter what order we do this in! We'll get the same result.

First, let's derive a small generalization of Bayes' rule, which applies when we are conditioning on more than one variable.

which, when E and F are conditionally independent given A is

So, now, let's think about updating a distribution on some random variable of interest A , given two pieces of evidence: E = e and F = f . (It might be that we're modeling our information about a disease someone has (A ) given the results of two tests (E = e and F = f ). We can start by applying the rule we derived above:

And now, we can take the term P(A \mid F = f) and apply Bayes' rule to it, inside this formula, to get

This is looking pretty promising. We assume we know how evidence variables E and F depend on A , and we certainly have the prior P(A) . So we just have to play around with the denominator a little, to get

Now, we can gather up the terms slightly differently to reveal a sequential update structure:

Clearly, the first factor in the right-hand side is the Bayes update of P(A) based on evidence E = e . The second factor takes that distribution, and does another Bayesian update, this time based on the evidence that F = f . The second denominator might cause some confusion. We can think about the denominators in two ways: a careful way and a relaxed way.

The careful way is to remember that P(E = e) = \sum _ a P(E=e\mid A = a)P(A = a) , which we can easily compute as the sum of the numerator terms of the first factor for all values of a . And, similarly,

which can be rewritten as

The cool thing to note is that this is just the sum of products of the the numerator terms, once we cancel P(E = e) .

The relaxed way is to note that neither denominator term depends on the particular value of a we are interested in: from the perspective of making a distribution over A , these are just constants, and we can pick them to make it so that the distribution sums to 1. This all boils down to normalizing the distribution after each evidence update.

So, we can see that to compute P(A \mid E = e, F = f) we just need to compute P(A \mid E = e) using Bayes rule, then take that distribution, and apply Bayes rule again to get.

Convince yourself that if we did the updates in the other, first based on F = f and then based on E = e , we would get the same result.

Write a method of DDist called multiBayes that assumes self is a representation of some distribution P(A) , and takes as an argument a list of n pairs, each of which has the form (P(E_ i \mid A), e_ i) , representing several pieces of evidence, and returns a distribution representing P(A \mid E_1 = e_1, \ldots , E_n = e_n) .

This is sometimes called recursive Bayesian updating. For fun, do it recursively!

Verify that if you change the order of presentation of evidence that the resulting distribution is the same.

4.6.5) Total probability

Another common pattern of reasoning is sometimes known as the law of total probability. What if we have our basic distributions specified in an inconvenient form: we know, for example, \Pr (A) and \Pr (B \mid A) , but what we really care about is \Pr (B) ? We can form the joint distribution over A and B , and then marginalize it by summing over values of A . To compute one entry of the resulting distribution on B , we would do:

but it's easier to think about as an operation on the whole distributions.

Footnotes

1In probability, it is typical to talk about a 'sample space' rather than a 'state space,' but they both come to the same thing: a space of possible situations.